The Vision: Asking Questions, Getting Visualizations

- Natural Language Querying (NLQ): Allow users to type questions in plain English to generate relevant charts and data visualizations.

- Intelligent Suggestions: Proactively offer insights or suggest relevant analyses based on the user's data and context.

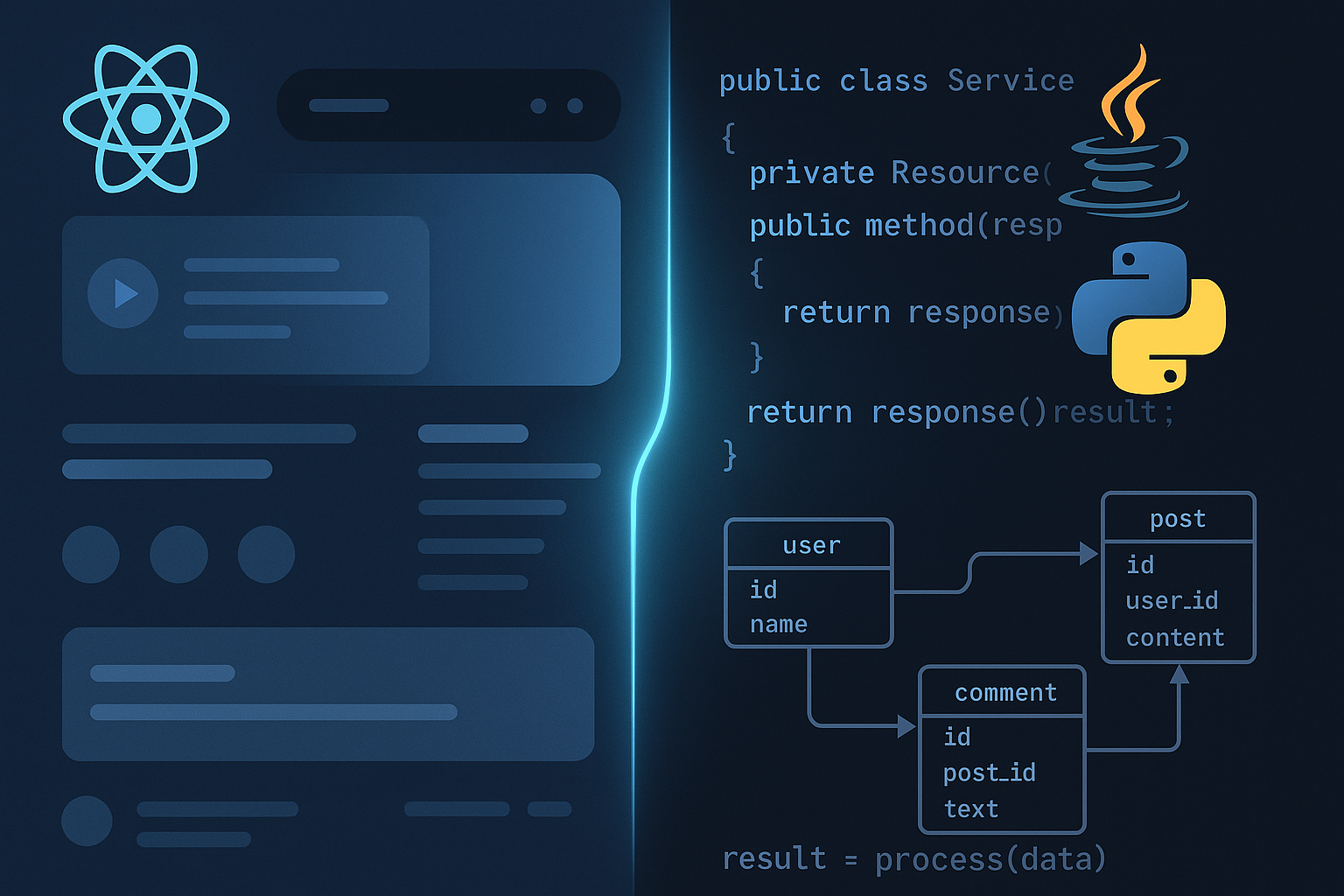

The Tech Stack: Python, LangChain, and OpenAI

- LangChain: This powerful framework became the backbone for orchestrating interactions between our application, the LLM, and our data sources. It allowed us to build "agents" capable of understanding user intent, planning steps, interacting with tools (like our database query functions), and generating responses.

- OpenAI (LLMs): We used OpenAI's large language models as the core intelligence engine. Their strong natural language understanding and generation capabilities were essential for interpreting user queries and producing useful responses or visualization configurations.

- Python: The natural choice for AI/ML development, providing robust libraries and seamless integration with LangChain and OpenAI's SDK.
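LangChain runs the agent loop for you, but the underlying idea is simple. At each step the LLM either picks a tool with arguments or returns a final answer. The application then executes the tool and feeds the result back. The sketch below shows that loop in plain Python, with a scripted stand-in for the model. `run_agent`, `ScriptedLLM`, and the `lookup_sales` tool are hypothetical illustrations, not LangChain APIs.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToolCall:
    name: str
    args: dict


@dataclass
class FinalAnswer:
    text: str


@dataclass
class ScriptedLLM:
    """Hypothetical stand-in for a real LLM: replays a fixed list of decisions."""
    steps: list
    seen: list = field(default_factory=list)

    def decide(self, history: list):
        # A real model would read the history and choose the next action itself.
        self.seen.append(list(history))
        return self.steps.pop(0)


def run_agent(llm, tools: dict[str, Callable[..., object]], question: str, max_steps: int = 5) -> str:
    history = [("user", question)]
    for _ in range(max_steps):
        action = llm.decide(history)
        if isinstance(action, FinalAnswer):
            return action.text
        if action.name not in tools:
            # Report unknown tools back to the model instead of crashing.
            history.append(("tool_error", f"Unknown tool: {action.name}"))
            continue
        result = tools[action.name](**action.args)
        history.append(("tool", action.name, result))
    return "Stopped: step limit reached without a final answer."


def lookup_sales(region: str) -> int:
    # Hypothetical tool backed by a hard-coded table.
    return {"EMEA": 120, "APAC": 95}.get(region, 0)


llm = ScriptedLLM(steps=[
    ToolCall("lookup_sales", {"region": "EMEA"}),
    FinalAnswer("EMEA sales were 120."),
])
print(run_agent(llm, {"lookup_sales": lookup_sales}, "What were EMEA sales?"))
```

The step limit matters in practice. A model that keeps requesting tools without converging should not be able to loop forever.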

Building the AI Agent: Key Steps & Challenges

- Intent Recognition: The first step was teaching the agent to understand the user's goal. Was the user asking for a trend, a comparison, a breakdown? This involved careful prompt engineering and potentially fine-tuning models on domain-specific examples.

- Tool Creation (LangChain Tools): We defined "tools" that the LangChain agent could use. A key tool was one that could translate the understood intent into a structured query (like SQL or parameters for our visualization engine) to fetch the necessary data.

- Data Fetching & Formatting: The agent needed to execute the query, receive the data, and format it appropriately for the visualization component (developed in Next.js).

- Visualization Generation: Based on the query and results, the agent (or associated logic) determined the most suitable chart type (bar, line, pie, etc.) and configured it for display.

- Handling Ambiguity & Errors: Natural language is inherently ambiguous. We had to build mechanisms for the agent to ask clarifying questions or gracefully handle queries it couldn't understand or execute.

- Prompt Engineering: Continuously refining the prompts given to the LLM was crucial for accuracy, relevance, and ensuring the agent stayed within its designated capabilities.
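One way to combine intent recognition, ambiguity handling, and prompt constraints is to ask the model for a small JSON object. The code then validates that object before anything touches the database. The sketch below assumes a hypothetical `call_llm` function, stubbed here with a canned response. The intent labels, metric names, and prompt wording are illustrative, not the ones used in our system.

```python
import json
from typing import Callable

INTENTS = {"trend", "comparison", "breakdown"}
KNOWN_METRICS = {"sales", "orders", "revenue"}

PROMPT_TEMPLATE = """You translate business questions into JSON for a charting tool.
Only answer questions about these metrics: {metrics}.
Return exactly one JSON object with keys:
  "intent": one of {intents}
  "metric": one of the metrics above, or null if unclear
  "dimension": the field to group by (e.g. "region", "month"), or null if unclear
Question: {question}"""


def build_prompt(question: str) -> str:
    return PROMPT_TEMPLATE.format(
        metrics=", ".join(sorted(KNOWN_METRICS)),
        intents=", ".join(sorted(INTENTS)),
        question=question,
    )


def parse_intent(question: str, call_llm: Callable[[str], str]) -> dict:
    """Return {"status": "ok", ...} or {"status": "clarify", "question": ...}."""
    raw = call_llm(build_prompt(question))
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError:
        parsed = None
    if not isinstance(parsed, dict):
        return {"status": "clarify", "question": "Sorry, could you rephrase that?"}
    # Each missing or invalid slot becomes a clarifying question instead of a guess.
    if parsed.get("intent") not in INTENTS:
        return {"status": "clarify", "question": "Are you looking for a trend, a comparison, or a breakdown?"}
    if parsed.get("metric") not in KNOWN_METRICS:
        return {"status": "clarify", "question": f"Which metric do you mean: {', '.join(sorted(KNOWN_METRICS))}?"}
    if not parsed.get("dimension"):
        return {"status": "clarify", "question": f"How should {parsed['metric']} be grouped (for example by region or by month)?"}
    return {"status": "ok", "intent": parsed["intent"], "metric": parsed["metric"], "dimension": parsed["dimension"]}


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call; always returns the same JSON.
    return '{"intent": "breakdown", "metric": "sales", "dimension": "region"}'


print(parse_intent("Show me sales by region", fake_llm))
```

Keeping validation in code rather than in the prompt means a model that drifts outside its designated capabilities produces a clarifying question, not a bad query.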

A simplified version of the visualization tool described above, written as a LangChain `BaseTool`:

```python
from typing import Type

from langchain_core.tools import BaseTool
from pydantic import BaseModel, Field


# Assumed to exist: takes a structured query and returns data plus a visualization config.
def generate_visualization(query_params: dict) -> dict:
    # ... interacts with the backend data/visualization engine ...
    print(f"Generating visualization based on: {query_params}")
    return {"chart_type": "bar", "data": [...], "title": "Sales by Region"}


class VizQueryInput(BaseModel):
    intent: str = Field(description="The user's goal, e.g., 'sales trend last quarter by region'")
    # Add other fields needed to structure the query


class VisualizationTool(BaseTool):
    # Recent LangChain versions (Pydantic v2) require type annotations on overridden fields.
    name: str = "VisualizationGenerator"
    description: str = "Useful when you need to generate a data visualization based on a user query about business data."
    args_schema: Type[BaseModel] = VizQueryInput

    def _run(self, intent: str, **kwargs) -> dict:
        # 1. Translate the intent (+kwargs) into structured query_params (simplified placeholder)
        query_params = {
            "sql_equivalent": "SELECT region, SUM(sales) FROM sales_table WHERE date >= '...' GROUP BY region",
            "chart_hint": "bar",
        }
        # 2. Call the actual visualization generation function
        viz_config = generate_visualization(query_params)
        return viz_config  # Return the config for the frontend

    async def _arun(self, intent: str, **kwargs) -> dict:
        # Implement an async version if needed
        raise NotImplementedError("VisualizationTool does not support async")
```
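In `VisualizationTool._run`, step 1 is a hard-coded placeholder. The sketch below shows one way the translation, fetching, and formatting steps could fit together. It assumes the parsed intent arrives as a dict with `intent`, `metric`, `dimension`, and optional `since` keys. It also uses:

- an in-memory SQLite table with made-up rows,
- allowlists so that model output is never interpolated into SQL unchecked, and
- a simple rule for picking the chart type.

The table schema, column names, and chart rules are all hypothetical.

```python
import sqlite3

# Allowlists: only these SQL fragments can ever reach the query string.
METRIC_COLUMNS = {"sales": "SUM(amount)", "orders": "COUNT(*)"}
DIMENSION_COLUMNS = {"region": "region", "month": "strftime('%Y-%m', order_date)"}


def build_query(params: dict) -> tuple[str, list]:
    """Translate validated intent parameters into SQL plus bound values."""
    metric = METRIC_COLUMNS[params["metric"]]  # KeyError for anything not allowlisted
    dimension = DIMENSION_COLUMNS[params["dimension"]]
    sql = f"SELECT {dimension} AS label, {metric} AS value FROM sales"
    values = []
    if params.get("since"):
        sql += " WHERE order_date >= ?"  # values are bound as parameters, never interpolated
        values.append(params["since"])
    sql += " GROUP BY label ORDER BY label"
    return sql, values


def choose_chart(params: dict, n_rows: int) -> str:
    """Heuristic: time series -> line, small share-of-whole -> pie, otherwise bar."""
    if params["dimension"] == "month":
        return "line"
    if params["intent"] == "breakdown" and n_rows <= 5:
        return "pie"
    return "bar"


def to_chart_config(params: dict, rows: list) -> dict:
    """Shape rows into a JSON-friendly config that a frontend chart component could consume."""
    return {
        "chart_type": choose_chart(params, len(rows)),
        "title": f"{params['metric'].title()} by {params['dimension'].title()}",
        "labels": [label for label, _ in rows],
        "values": [value for _, value in rows],
    }


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, order_date TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("EMEA", "2024-01-15", 100.0), ("APAC", "2024-01-20", 80.0), ("EMEA", "2024-02-03", 50.0)],
)

params = {"intent": "breakdown", "metric": "sales", "dimension": "region", "since": "2024-01-01"}
sql, values = build_query(params)
rows = conn.execute(sql, values).fetchall()
print(to_chart_config(params, rows))
```

Column names cannot be passed as SQL parameters, which is why metrics and dimensions go through an allowlist while filter values such as dates use `?` placeholders.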

The Impact: Democratizing Data

- Lowered the barrier to entry for data analysis.

- Accelerated insight discovery.

- Reduced the manual effort of building reports and charts by roughly 90%.

- Created a more engaging and intuitive user experience.

Editor's Note: This post from early 2024 details our initial work in applying LLMs to BI. This foundational experience paved the way for the more advanced system described in my deep-dive on Building Agentic RAG Systems That Scale.